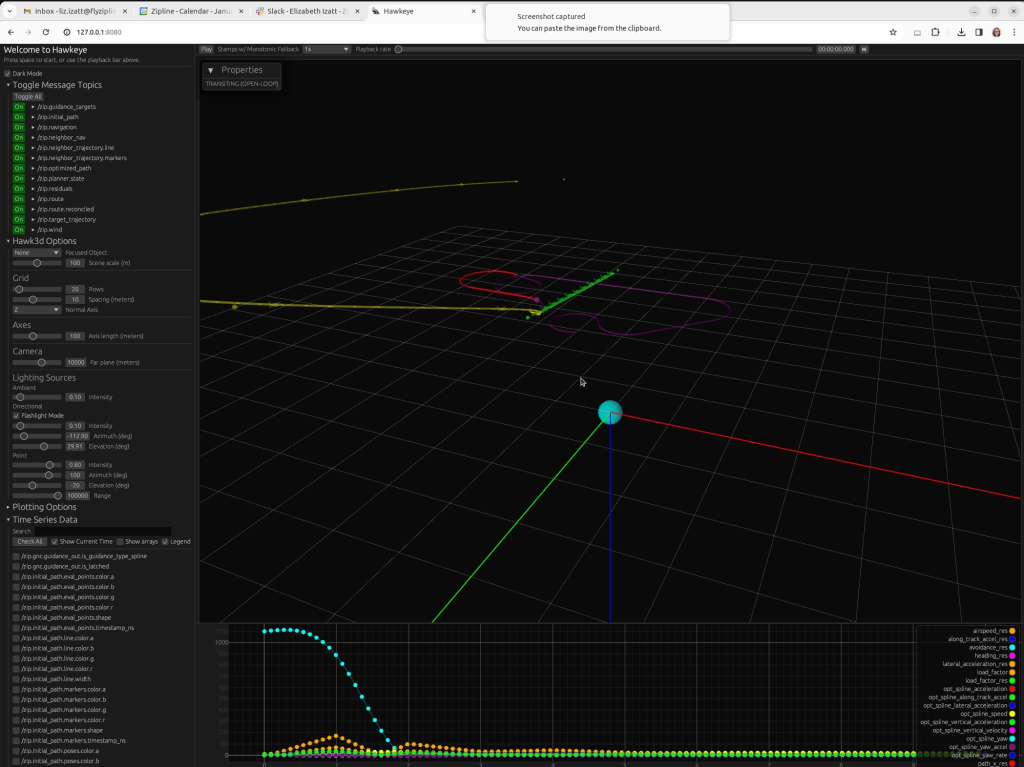

At Zipline, in addition to contributing to the simulation team’s integration efforts, I became an owner of the Hawkeye visualization application. It was written in Rust, integrated with some of the same libraries as flight code, and ran natively via cargo. Similar to Xviz, it depended on marker messages being present in the IPC logs it visualized, one for each object to be rendered, so either flight code, the simulator, or a postprocessing script had to add those marker messages to the logs. The original creators of Hawkeye, the GNC team, had a postprocessing script as part of their pipeline, so this worked naturally for them. But more and more people wanted to use it, just as the simulator my team was responsible for was gaining traction.

The first addition I made was having the simulator parse ground truth data while running and optionally publish Hawkeye marker messages. This gave sim users working viz by default, assuming they could run Hawkeye.
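
As a rough illustration of that idea, here is a minimal sketch of turning ground truth into marker messages with an opt-in switch. Every name in it (`GroundTruthObject`, `Marker`, `MarkerPublisher`) is hypothetical, and the in-memory `Vec` is only a stand-in for the real IPC log, whose message schema is not shown here.

```rust
/// Hypothetical ground-truth record for one simulated object.
#[derive(Debug, Clone, PartialEq)]
pub struct GroundTruthObject {
    pub id: u32,
    pub label: String,
    pub position_m: [f64; 3],
}

/// Hypothetical marker message: the minimum a visualizer needs to draw one object.
#[derive(Debug, Clone, PartialEq)]
pub struct Marker {
    pub object_id: u32,
    pub label: String,
    pub position_m: [f64; 3],
    pub timestamp_ns: u64,
}

/// Converts ground truth into markers. Publishing is optional, so runs that
/// do not need visualization pay no extra cost.
pub struct MarkerPublisher {
    enabled: bool,
    log: Vec<Marker>, // stand-in for the IPC log (assumption)
}

impl MarkerPublisher {
    pub fn new(enabled: bool) -> Self {
        MarkerPublisher { enabled, log: Vec::new() }
    }

    /// Emits one marker per ground-truth object for this simulation step.
    /// Returns the number of markers written (0 when publishing is disabled).
    pub fn publish_step(&mut self, timestamp_ns: u64, objects: &[GroundTruthObject]) -> usize {
        if !self.enabled {
            return 0;
        }
        for obj in objects {
            self.log.push(Marker {
                object_id: obj.id,
                label: obj.label.clone(),
                position_m: obj.position_m,
                timestamp_ns,
            });
        }
        objects.len()
    }

    /// Read-only view of everything published so far.
    pub fn log(&self) -> &[Marker] {
        &self.log
    }
}
```

Calling `publish_step` once per simulation tick with the current ground-truth objects would leave one marker per object per tick in the log, which is the shape of data a marker-driven viewer expects.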

That assumption, however, was proving shaky as the company increasingly moved to EC2-based devboxes. The creator of Hawkeye and I sat down, and he showed me a prototype he’d gotten about 70% of the way done: it compiled Hawkeye to a WebAssembly binary, then served it as a JavaScript-powered frontend via a handy tool called Trunk.

I took up that prototype and got it compiling by making some tweaks to the build dependency tree, excluding libraries used for video playback that we would not be able to use on the web. Once it worked locally, I worked with a devops engineer to deploy the app via a Kubernetes wrapper the company had developed in house. After a lot of trial and error, and a lot of learning on my part as someone without much cloud experience, we got the permissions and roles worked out and the app deployed, available to everyone on the Zipline VPN via hawkeye.flyzipline.com (and hawkeye-staging.flyzipline.com and hawkeye.flyziplinedev.com, for deployment and testing…).

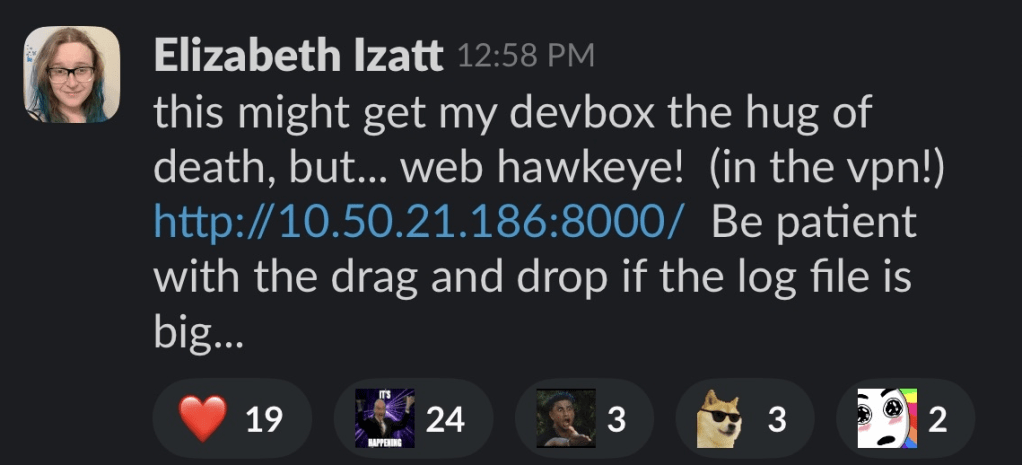

Before deploying to hawkeye.flyzipline.com, I let people try it hosted from my devbox… somehow, this did not go horribly wrong.

After that, there was always more work to do, whether pulling more features into the web version or creating new ones.

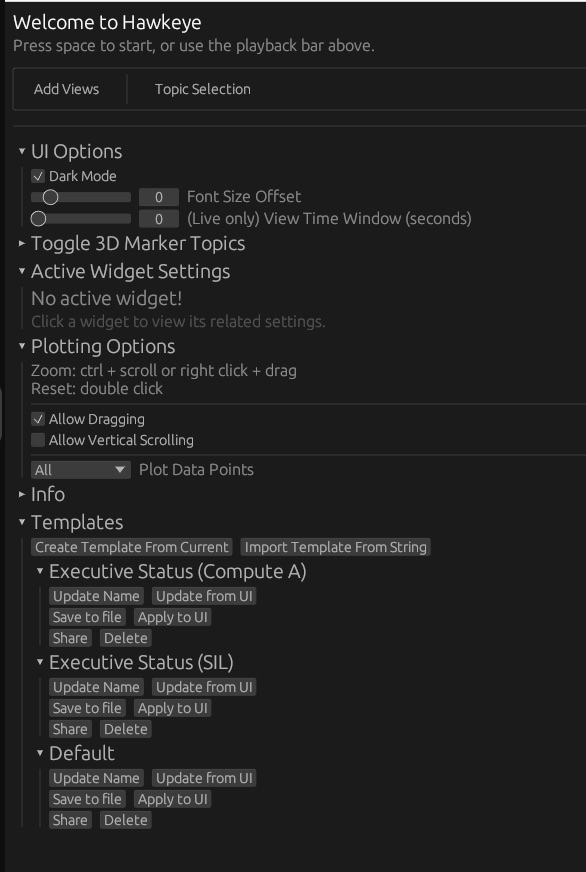

A partner joined me on the Hawkeye project and helped both enable video on the web and refactor the UI to be modular and rearrangeable. Building on that, I created a templating system that let you save UI configurations, persist them between sessions, and share them via a raw string or a file.
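
To make the "share via a raw string" idea concrete, here is a minimal sketch of a layout template that round-trips through a plain string. The types (`Panel`, `LayoutTemplate`) and the `name|kind:width,...` format are invented for illustration and are not Hawkeye's actual format; a real implementation would more likely lean on a serialization library.

```rust
/// Hypothetical description of one UI panel: what it shows and how wide it is.
#[derive(Debug, Clone, PartialEq)]
pub struct Panel {
    pub kind: String,
    pub width_pct: u8,
}

/// Hypothetical saved UI configuration.
#[derive(Debug, Clone, PartialEq)]
pub struct LayoutTemplate {
    pub name: String,
    pub panels: Vec<Panel>,
}

impl LayoutTemplate {
    /// Encodes the template as `name|kind:width,kind:width`.
    /// Assumes names contain no '|' and panel kinds contain no ':' or ','.
    pub fn to_share_string(&self) -> String {
        let panels: Vec<String> = self
            .panels
            .iter()
            .map(|p| format!("{}:{}", p.kind, p.width_pct))
            .collect();
        format!("{}|{}", self.name, panels.join(","))
    }

    /// Parses a string produced by `to_share_string`, rejecting malformed input.
    pub fn from_share_string(s: &str) -> Result<Self, String> {
        let (name, rest) = s
            .split_once('|')
            .ok_or_else(|| "missing '|' separator".to_string())?;
        let mut panels = Vec::new();
        for entry in rest.split(',').filter(|e| !e.is_empty()) {
            let (kind, width) = entry
                .split_once(':')
                .ok_or_else(|| format!("bad panel entry: {}", entry))?;
            let width_pct: u8 = width
                .parse()
                .map_err(|_| format!("bad width: {}", width))?;
            panels.push(Panel { kind: kind.to_string(), width_pct });
        }
        Ok(LayoutTemplate { name: name.to_string(), panels })
    }
}
```

The same string can be written to a file or to browser storage to persist a layout between sessions, or pasted to a teammate; either way, loading it is a single `from_share_string` call.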

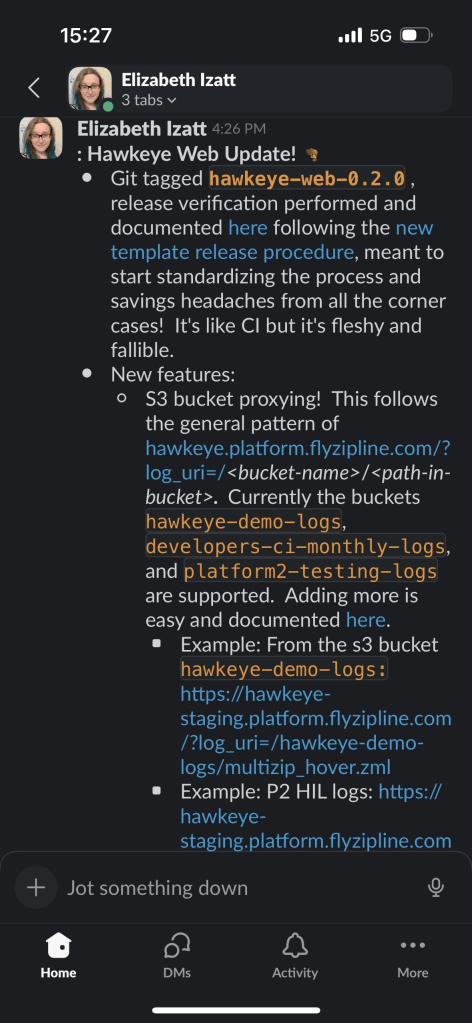

Since all of our logs ended up on S3 as part of the data ingest process, I also enabled proxying to these files, so we could put links directly in our CI output to visualize the log from a given run.
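
A CI job could build such a link with something like the sketch below. The `log` query parameter and the example host are assumptions made for illustration; the actual URL scheme the proxy used is not described here.

```rust
/// Percent-encodes every byte except the RFC 3986 "unreserved" characters,
/// so an S3 key can be embedded safely in a query string.
pub fn percent_encode(input: &str) -> String {
    let mut out = String::with_capacity(input.len());
    for byte in input.bytes() {
        match byte {
            b'A'..=b'Z' | b'a'..=b'z' | b'0'..=b'9' | b'-' | b'_' | b'.' | b'~' => {
                out.push(byte as char)
            }
            _ => out.push_str(&format!("%{:02X}", byte)),
        }
    }
    out
}

/// Builds a viewer link for a log stored under `s3_key`.
/// The `?log=` parameter name is hypothetical.
pub fn viewer_link(base_url: &str, s3_key: &str) -> String {
    format!(
        "{}/?log={}",
        base_url.trim_end_matches('/'),
        percent_encode(s3_key)
    )
}
```

For example, `viewer_link("https://viewer.example", "runs/42/flight log.bin")` yields `https://viewer.example/?log=runs%2F42%2Fflight%20log.bin`, which a CI step can print next to the run's results.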